[Update: Until Open-CV is properly compatible with the new Raspberry Pi 'Bullseye' OS, I highly recommend flashing the previous Raspberry Pi 'Buster' OS onto your Micro-SD card for use with this guide. Official 'Buster' Image Download Link Here]

Furthering my quest for complete knowledge of artificial intelligence on the Raspberry Pi, the natural next step was to investigate Pose Recognition (Human Keypoint Detection) and Face Masking on this formidable single-board computer.

Ever wondered how Snapchat filters work? Three words: Facial Landmark Recognition. How is Facial Landmark Recognition (Masking) different from Face Identification? There are two practical differences. First, mask tracking places dots and segments all across your face, so it knows exactly where your eyes are in relation to your eyebrows. Second, it focuses only on your face and nothing else. Face Identification, by contrast, draws a box around your head and treats everything inside that box as a face. It doesn't know where your chin is and it will count the background behind you as 'human face' too. Pose estimation, on the other hand, is the task of using a machine-learned model to estimate the pose of a person from an image or a video by estimating the spatial locations of key body joints (referred to as keypoints).

Follow through this guide and you will know exactly how to do this so you can set up a similar system in your Maker-verse that suits your particular project. See the contents of the guide below.

- What You Need

- Initial Set-Up and Install Process

- Functional Pose Tracking Script

- Functional Face Mask Script

- Where to Now (GPIO Control and Other Applications)

- Setting Up Open-CV on Raspberry Pi 'Buster' OS

- Download Scripts

Machine and deep learning have never been more accessible, and this guide will be a demonstration of this. The two systems built here will use the Open-CV library. This is a huge resource that helps solve real-time computer vision and image processing problems. If you are interested in more Open-CV check out the guides Raspberry Pi and Facial Recognition, Object and Animal Recognition with Raspberry Pi, Speed Camera With Raspberry Pi, Scan QR codes with Raspberry Pi, Hand Recognition and Finger Identification with Raspberry Pi, or Face and Movement Tracking with a Pan-Tilt System. See the image below of the Pose Tracking and Face Mask software running on the Raspberry Pi 'Buster' OS when using a Raspberry Pi 4 Model B with a High-Quality Camera Module and a 5mm lens.

Find at the bottom of this page the process to install Open-CV to your Raspberry Pi. All scripts explored here will utilise the Python Programming Language (come check the Python Workshop if you need it). Open-CV and MediaPipe combine to make the scripts work. MediaPipe, together with the TensorFlow Lite models it runs under the hood, is how we get pose detection and the facial landmark detection algorithm to work. MediaPipe has the most powerful facial landmark model that will run on the Raspberry Pi.

As always, if you have any questions or queries, need a hand, or have things you'd like to see added, please let us know your thoughts! Either write a comment or create a forum post.

What You Need

Below is a list of the components you will need to get this system up and running real fast.

- Raspberry Pi 4 Model B (The extra computing power 'oomph' that the Pi 4 provides is super helpful for this task. This set-up will also work with a Raspberry Pi 3 Model B, it will just be a little slower)

- Raspberry Pi High-Quality Camera and Camera Lens (You can also use a Raspberry Pi Official Camera Module V2)

- Micro SD Card

- Power Supply

- Monitor

- HDMI Cord

- Mouse and Keyboard

Initial Set-Up and Install Process

Connect the Raspberry Pi to a monitor, mouse, and keyboard so it runs as a desktop computer. Make sure a Pi Camera is installed in the correct slot with the ribbon cable facing the right way, then start the Open-CV install process found at the bottom of this page. With that complete, you will have Open-CV installed onto a fresh version of Raspberry Pi 'Buster' OS. Then open up the Raspberry Pi Configuration menu (found using the top left Menu and scrolling over Preferences) and enable the Camera found under the Interfaces tab. After enabling it, restart your Raspberry Pi to lock in this change. See the image below for the setting location.

Once that is complete the next step is to open up a new Terminal using the black button on the top left of the screen. Below you can see what it looks like when you open a Terminal on the Raspberry Pi 'Buster' Desktop and in that image there is a big arrow pointing to the icon which was clicked with the mouse to open it.

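Now we will type each of the lines below into the terminal window, pressing Enter after each one, to install the necessary packages. Press | Y | on your keyboard when it requires you to do so to continue the installation of these packages. Going by the package names, mediapipe-rpi3 targets the Raspberry Pi 3 and mediapipe-rpi4 targets the Raspberry Pi 4, so you should only need the one that matches your board.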

sudo apt-get update && sudo apt-get upgrade

sudo apt-get install python-opencv python3-opencv opencv-data

sudo pip3 install mediapipe-rpi3

sudo pip3 install mediapipe-rpi4

sudo pip3 install gtts

sudo apt install mpg321
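Before moving on, it can be worth confirming that Python can actually see the freshly installed libraries. Below is a minimal sketch of such a check. The helper name installed_version is my own invention for illustration, and the module names (cv2 for Open-CV, mediapipe, gtts) are assumed to match the packages installed above.

```python
import importlib


def installed_version(module_name):
    """Return the module's version string, 'unknown' if it has none,
    or None if the module cannot be imported."""
    try:
        module = importlib.import_module(module_name)
    except ImportError:
        return None
    return getattr(module, "__version__", "unknown")


if __name__ == "__main__":
    # Assumed module names: cv2 is Open-CV's Python module,
    # mediapipe and gtts come from the pip3 installs above.
    for name in ("cv2", "mediapipe", "gtts"):
        version = installed_version(name)
        status = "missing" if version is None else "found, version " + str(version)
        print(name + ": " + status)
```

Save it as a file, run it with python3, and any line reporting 'missing' points at the install step to repeat.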

Functional Pose Tracking Script

With that done let's get into it. Below is the script we will use to get our Pose Tracking system up and running. Find and download it at the bottom of this page. When running, it will identify human bodies and place dots over the important joints and the face of each identified human. These dots, also referred to as landmarks, all have X, Y coordinates associated with them. This means, in a similar vein to the Raspberry Pi Hand Tracking, you will be able to identify when certain joints are above or below others. This change can then be used as a counter to track certain movements.

#Import all important functionality
import cv2
import mediapipe as mp

#Start cv2 video capturing through CSI port
cap=cv2.VideoCapture(0)

#Initialise Media Pipe Pose features
mp_pose=mp.solutions.pose
mpDraw=mp.solutions.drawing_utils
pose=mp_pose.Pose()

#Start endless loop to create video frame by frame. Add details about video size and image post-processing to better identify bodies
while True:
    ret,frame=cap.read()
    #Stop if the camera did not return a frame
    if not ret:
        break
    flipped=cv2.flip(frame,flipCode=1)
    frame1 = cv2.resize(flipped,(640,480))
    rgb_img=cv2.cvtColor(frame1,cv2.COLOR_BGR2RGB)
    result=pose.process(rgb_img)

    #Print general details about observed body
    print (result.pose_landmarks)

    #Uncomment below to see X,Y coordinate Details on single location in this case the Nose Location.
    #try:
    #    print('X Coords are', result.pose_landmarks.landmark[mp_pose.PoseLandmark.NOSE].x * 640)
    #    print('Y Coords are', result.pose_landmarks.landmark[mp_pose.PoseLandmark.NOSE].y * 480)
    #except AttributeError:
    #    pass

    #Draw the framework of body onto the processed image and then show it in the preview window
    mpDraw.draw_landmarks(frame1,result.pose_landmarks,mp_pose.POSE_CONNECTIONS)
    cv2.imshow("frame",frame1)

    #At any point if the | q | is pressed on the keyboard then the system will stop
    key = cv2.waitKey(1) & 0xFF
    if key ==ord("q"):
        break

#Release the camera and close the preview window
cap.release()
cv2.destroyAllWindows()

And just check out below what it looks like in the preview window that opens up when you run the above script. It will create a framework and overlay it wherever it believes it can see a human body. Also of note, this script identifies the general face and eye locations on your head. For each landmark it also outputs to the shell a number (between 0 and 1) that represents visibility. If only a part of your body is seen then that visibility number might be around 0.1.

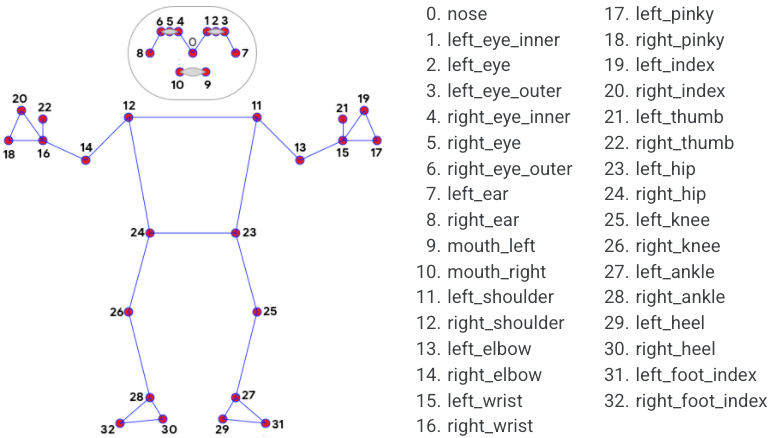
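The visibility number can be turned into a simple 'is somebody actually in view?' check. Here is a rough sketch of that idea. The function name person_present and both threshold values are my own assumptions rather than anything from MediaPipe, and the Landmark tuple is only a stand-in so the example runs without a camera.

```python
from collections import namedtuple

# Stand-in for a MediaPipe landmark so this sketch runs without a camera.
Landmark = namedtuple("Landmark", ["x", "y", "visibility"])


def person_present(landmarks, threshold=0.5, min_fraction=0.5):
    """Return True when at least min_fraction of the landmarks have a
    visibility of threshold or higher. Both defaults are guesses to tune."""
    if not landmarks:
        return False
    visible = sum(1 for lm in landmarks if lm.visibility >= threshold)
    return visible / len(landmarks) >= min_fraction


# Simulated frames: a clearly visible body and a mostly hidden one.
clear_body = [Landmark(0.5, 0.5, 0.9)] * 33
hidden_body = [Landmark(0.5, 0.5, 0.1)] * 33
print(person_present(clear_body))
print(person_present(hidden_body))
```

In the pose script you would pass in result.pose_landmarks.landmark, after first checking that result.pose_landmarks is not None.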

In a similar manner to how we could identify and gain live X-Y data points on particular joints in the human hand (as seen in this section of Hand Recognition with Raspberry Pi) we can do the same here for body parts. See below for the diagram showing how each part of the human body has been labelled and numbered for use in code by MediaPipe. So if you were interested in knowing the exact X, Y coordinates of an individual's nose you would use the index number | 0 | or refer to it by its full name | NOSE |. If you take the above script, uncomment that particular section, and then save and run it, it will print the X and Y coordinates of any identified nose to the shell. Interestingly, it also appears to predict the location of the nose even when it is not on the screen.
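MediaPipe reports each landmark position as a fraction of the frame size, which is why the script multiplies by 640 and 480. The sketch below shows that conversion plus an 'is this joint above that one?' test. The helper names to_pixels and is_above are hypothetical. The index numbers in POSE_INDEX follow MediaPipe's pose numbering as I understand it, so check them against the diagram before relying on them.

```python
# Index numbers assumed from MediaPipe's pose landmark numbering.
POSE_INDEX = {
    "NOSE": 0,
    "LEFT_SHOULDER": 11,
    "RIGHT_SHOULDER": 12,
    "LEFT_WRIST": 15,
    "RIGHT_WRIST": 16,
}


def to_pixels(norm_x, norm_y, width=640, height=480):
    """Convert normalised coordinates into integer pixel coordinates.
    Values outside 0..1 mean the point is estimated to be off screen."""
    return int(round(norm_x * width)), int(round(norm_y * height))


def is_above(y_a, y_b, margin=0.0):
    """True when point A sits higher in the image than point B.
    Image Y grows downwards, so 'above' means a smaller Y value."""
    return y_a < y_b - margin


# A nose in the middle of a 640x480 frame.
print(to_pixels(0.5, 0.5))
# A wrist at Y=0.2 is above a shoulder at Y=0.6.
print(is_above(0.2, 0.6))
```

With a live result you would read result.pose_landmarks.landmark[POSE_INDEX["LEFT_WRIST"]].y and compare it against the shoulder's Y value in the same way.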

Functional Face Mask Script

Below is the script we will use to get our Face Mask system up and running. Find and download it at the bottom of this page. It will identify faces and, when it does, map a whole bunch of points across each identified face. These points (also referred to as landmarks) all have X, Y coordinates associated with them. This deep learning system tracks far more than the commonly used 68 facial landmarks (this one actually has 468 points) and runs at an FPS greater than 10 on the Raspberry Pi 4 Model B.

import cv2
import sys, time
import mediapipe as mp

mp_drawing = mp.solutions.drawing_utils
mp_face_mesh = mp.solutions.face_mesh

# Drawing style and face mesh model.
# static_image_mode=True runs face detection on every frame. Setting it to False
# lets MediaPipe track the face between video frames instead.
drawing_spec = mp_drawing.DrawingSpec(thickness=1, circle_radius=1)
face_mesh = mp_face_mesh.FaceMesh(static_image_mode=True, max_num_faces=1, min_detection_confidence=0.5)

def get_face_mesh(image):
    results = face_mesh.process(cv2.cvtColor(image, cv2.COLOR_BGR2RGB))

    # Print and draw face mesh landmarks on the image.
    if not results.multi_face_landmarks:
        return image
    annotated_image = image.copy()
    for face_landmarks in results.multi_face_landmarks:
        #print(' face_landmarks:', face_landmarks)
        mp_drawing.draw_landmarks(
            image=annotated_image,
            landmark_list=face_landmarks,
            connections=mp_face_mesh.FACEMESH_CONTOURS,
            landmark_drawing_spec=drawing_spec,
            connection_drawing_spec=drawing_spec)
        #print('%d facemesh_landmarks'%len(face_landmarks.landmark))
    return annotated_image

font = cv2.FONT_HERSHEY_SIMPLEX
cap = cv2.VideoCapture(0)
if not cap.isOpened():
    print("Unable to read camera feed")

cap.set(cv2.CAP_PROP_FRAME_WIDTH, 640)
cap.set(cv2.CAP_PROP_FRAME_HEIGHT, 480)

while cap.isOpened():
    s = time.time()
    ret, img = cap.read()
    if not ret:
        print('WebCAM Read Error')
        sys.exit(0)

    annotated = get_face_mesh(img)
    e = time.time()
    fps = 1 / (e - s)
    cv2.putText(annotated, 'FPS:%5.2f'%(fps), (10,50), font, fontScale = 1, color = (0,255,0), thickness = 1)
    cv2.imshow('webcam', annotated)

    # Press the ESC key to stop
    key = cv2.waitKey(1)
    if key == 27: #ESC
        break

# Release the camera and close the preview window
cap.release()
cv2.destroyAllWindows()
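The FPS figure drawn by the script is recalculated from a single frame each time, so it tends to jump around. One option is to smooth it with an exponential moving average. The class below is only a sketch of that idea. FpsMeter and its smoothing parameter are hypothetical names and are not part of Open-CV or MediaPipe.

```python
class FpsMeter:
    """Exponentially smoothed frames-per-second estimate."""

    def __init__(self, smoothing=0.9):
        # Closer to 1.0 means a steadier but slower-reacting number.
        self.smoothing = smoothing
        self.fps = None

    def update(self, frame_seconds):
        """Feed in how long one frame took (seconds), get the smoothed FPS back."""
        if frame_seconds <= 0:
            # Ignore impossible timings rather than dividing by zero.
            return self.fps
        instant = 1.0 / frame_seconds
        if self.fps is None:
            self.fps = instant
        else:
            self.fps = self.smoothing * self.fps + (1.0 - self.smoothing) * instant
        return self.fps


# Simulated frame times: steady 0.1 s frames with one slow 0.5 s frame.
meter = FpsMeter()
for seconds in (0.1, 0.1, 0.5, 0.1):
    print(round(meter.update(seconds), 2))
```

In the script above you would create one meter before the loop and replace the fps = 1 / (e - s) line with fps = meter.update(e - s).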

Below you can see what the live preview window looks like when the above script is running and it can see a face. It will throw on a whole mapping framework over what it believes to be a face.

Where to Now (GPIO Control and Other Applications)

There are heaps of places you can take either of these scripts. You can 'teach' the system what a push-up or bicep curl looks like (based on which joints are above and below others during those actions) and then use the pose tracking to keep track of how many reps you are doing. You could also make sure someone is still in front of the camera based on the visibility factor, so that if they ever try to hide from the camera it sets off an alarm. You also have the ability to create an 'improve your posture' nag machine. Whenever you start to slouch an annoying beeper will trigger, forcing you to sit up straight. Also, if you want to gain more three-dimensional data from your pose tracking system take a look at PoseNet architecture. 3D PoseNet predicts 3D poses from the concatenated 2D pose and depth features.

The Face Mask script can be expanded upon to do all kinds of things. Virtual-tubers (vtubers) rejoice, and opportunities to create custom Snapchat filters abound. You could also do basic Emotion Detection with this setup. That way if your Raspberry Pi detects that you're feeling down it can play your favourite tune to cheer you up! Or you could create a drowsiness detection Raspberry Pi System to improve safety. By focusing on the vertical distance between the upper and lower eyelids you can keep track of how sleepy you are getting. No matter what you do, hopefully this has inspired some creative Raspberry Pi projects!

So how is Facial Landmark Recognition different from Face Identification? The region of interest is much tighter and more accurate with Face Masking. Face Masking literally only focuses attention on your face and nothing else. This means Face Masking can be used to determine the orientation of your head, whereas Face Recognition will only be able to tell you, with a factor of confidence, that somewhere inside its bounding box there is a face. Face Recognition thus won't be able to determine what within the bounding box is background and what is human face.
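As a starting point for the rep counting idea, here is a small sketch that counts one rep each time a moving joint rises above a reference joint and then drops back below it. The RepCounter class and its margin value are my own assumptions. Which joints you feed it (for example wrist and shoulder, or wrist and elbow) depends on the exercise.

```python
class RepCounter:
    """Counts one rep each time the moving joint goes above the reference
    joint and then comes back below it."""

    def __init__(self, margin=0.05):
        # The margin (in normalised units) stops jitter around the
        # reference joint from being counted as reps.
        self.margin = margin
        self.count = 0
        self.raised = False

    def update(self, moving_y, reference_y):
        # Image Y grows downwards, so a raised joint has the smaller Y value.
        if not self.raised and moving_y < reference_y - self.margin:
            self.raised = True
        elif self.raised and moving_y > reference_y + self.margin:
            self.raised = False
            self.count += 1
        return self.count


# Simulated wrist heights against a shoulder at Y=0.5: two full raises.
counter = RepCounter()
for wrist_y in (0.8, 0.3, 0.8, 0.3, 0.3, 0.8):
    counter.update(wrist_y, 0.5)
print(counter.count)
```

Inside the pose loop you would call counter.update() once per frame with the Y values of the two landmarks you care about.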
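For the drowsiness idea, one common approach is to compare the gap between the eyelids with the width of the eye, then raise a flag if the eye stays nearly closed for a run of frames. The sketch below works on plain (x, y) pixel points. The names eye_openness and DrowsinessMonitor, the 0.2 ratio, and the 15 frame limit are all assumptions to tune. Landmark numbers often quoted for one eye in the face mesh are 159 and 145 for the lids and 33 and 133 for the corners, but treat those as unverified and check them against the mesh map.

```python
import math


def eye_openness(upper_lid, lower_lid, inner_corner, outer_corner):
    """Ratio of eyelid gap to eye width. Each argument is an (x, y) pair
    in pixels (scale the normalised values by frame width and height first)."""
    width = math.hypot(inner_corner[0] - outer_corner[0],
                       inner_corner[1] - outer_corner[1])
    if width == 0:
        return 0.0
    gap = math.hypot(upper_lid[0] - lower_lid[0],
                     upper_lid[1] - lower_lid[1])
    return gap / width


class DrowsinessMonitor:
    """Flags drowsiness after the eye stays nearly closed for many frames."""

    def __init__(self, closed_ratio=0.2, frames_needed=15):
        self.closed_ratio = closed_ratio
        self.frames_needed = frames_needed
        self.closed_frames = 0

    def update(self, ratio):
        # Count consecutive 'closed' frames, reset as soon as the eye opens.
        if ratio < self.closed_ratio:
            self.closed_frames += 1
        else:
            self.closed_frames = 0
        return self.closed_frames >= self.frames_needed


# An open eye: 20 pixel lid gap across a 40 pixel wide eye.
print(eye_openness((320, 230), (320, 250), (300, 240), (340, 240)))
```

A blink only lasts a few frames, so requiring a run of closed frames is what separates blinking from nodding off.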

Setting Up Open-CV on Raspberry Pi 'Buster' OS

This is an in-depth procedure to follow to get Open-CV installed on your Raspberry Pi so that it works with the Pose and Face Mask Tracking scripts talked about above. Soon I will create either a script or a separate tutorial to streamline this process. Turn on a Raspberry Pi 4 Model B with a Micro-SD card inserted that is running a fresh version of Raspberry Pi 'Buster' OS, and connect your system to the Internet.

Open up the Terminal by pressing the Terminal button found on the top left of the screen. Copy and paste each command into your Pi’s terminal, press Enter, and allow it to finish before moving onto the next command. If ever prompted, “Do you want to continue? (y/n)” press Y and then the Enter key to continue the process.

sudo apt-get update && sudo apt-get upgrade

We must now expand the swapfile before running the next set of commands. To do this, type this line into the terminal.

sudo nano /etc/dphys-swapfile

Then change the value on the line CONF_SWAPSIZE=100 to CONF_SWAPSIZE=2048. Having done this, press Ctrl-X, then Y, and then the Enter key to save the change. This change is only temporary and you should set the value back to 100 after completing the install. For the new size to take effect we must restart the swapfile service by entering the following command into the terminal.

sudo systemctl restart dphys-swapfile

Then we will resume Terminal commands as normal.

sudo apt-get install build-essential cmake pkg-config

sudo apt-get install libjpeg-dev libtiff5-dev libjasper-dev libpng-dev

sudo apt-get install libavcodec-dev libavformat-dev libswscale-dev libv4l-dev

sudo apt-get install libxvidcore-dev libx264-dev

sudo apt-get install libgtk2.0-dev libgtk-3-dev

sudo apt-get install libatlas-base-dev gfortran

sudo pip3 install numpy

wget -O opencv.zip https://github.com/opencv/opencv/archive/4.4.0.zip

wget -O opencv_contrib.zip https://github.com/opencv/opencv_contrib/archive/4.4.0.zip

unzip opencv.zip

unzip opencv_contrib.zip

cd ~/opencv-4.4.0/

mkdir build

cd build

cmake -D CMAKE_BUILD_TYPE=RELEASE \
-D CMAKE_INSTALL_PREFIX=/usr/local \
-D INSTALL_PYTHON_EXAMPLES=ON \
-D OPENCV_EXTRA_MODULES_PATH=~/opencv_contrib-4.4.0/modules \
-D BUILD_EXAMPLES=ON ..

make -j $(nproc)

This | make | command will take over an hour to run and there will be no indication of how much longer it will take. It may also freeze the monitor display. Be ultra patient and it will work. Once complete you are most of the way done. If it fails at any point and you receive a message like | make: *** [Makefile:163: all] Error 2 | just re-type and enter the above line | make -j $(nproc) |. Do not fear, it will remember all the work it has already done and continue from where it left off. Once complete we will resume terminal commands.

sudo make install && sudo ldconfig

sudo reboot

Download Scripts

Below is all the code you need to get up and running with these examples above. You can easily alter them to do exactly what you want for your purposes.