Transcript

That right there is my heartbeat being measured in real time by an AI-enhanced camera.

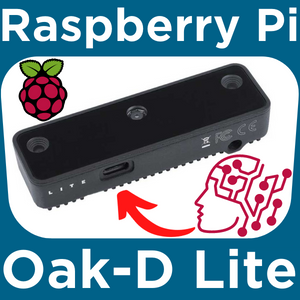

Hey gang, Tim here at Core Electronics, and today we are cracking open the world of machine learning on the edge with a Raspberry Pi and an OAK-D-Lite. By the end of this guide you are going to have a Raspberry Pi Single Board Computer with a spatial AI camera capable of keeping up with the most current advances in edge machine learnt technologies, so let's crack on.

Edge computing is the idea of pushing computing and data closer to where they're used, which in practice means no cloud computing: you rely only on the hardware on site. The Raspberry Pi 4 Model B is the gold standard of single board computers, but it could do with some extra computing power when it comes to running machine learning scripts. This is where the OAK-D-Lite, with its internal suite of AI circuitry, comes in. Not only will it perform calculations for the Pi, it also packs three front-facing cameras. The two edge-mounted stereo cameras enable the OAK-D-Lite to create depth maps and accurately determine the distance to identified objects.
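The depth map comes from stereo triangulation: an object appears shifted (the disparity) between the left and right images, and that shift shrinks as the object moves further away. Below is a minimal Python sketch of the standard pinhole stereo relation, depth = focal length × baseline / disparity. The 450 pixel focal length is a hypothetical value chosen for illustration, and the 7.5 cm baseline is an assumption about the spacing of the OAK-D-Lite's stereo pair; check your own device's calibration before relying on either number.

```python
def depth_from_disparity(focal_px: float, baseline_cm: float, disparity_px: float) -> float:
    """Return depth in cm using the pinhole stereo relation Z = f * B / d."""
    if disparity_px <= 0:
        # Zero disparity means no match (or an object at infinity): no valid depth.
        raise ValueError("disparity must be positive")
    return focal_px * baseline_cm / disparity_px


# Hypothetical values for illustration only (not read from a real device).
FOCAL_PX = 450.0    # assumed focal length of the mono cameras, in pixels
BASELINE_CM = 7.5   # assumed spacing between the two stereo cameras, in cm

for d in (45, 15, 5):
    z = depth_from_disparity(FOCAL_PX, BASELINE_CM, d)
    print(f"disparity {d:>2} px -> depth {z:.1f} cm")
```

Dividing the disparity by three triples the estimated depth, so far-away objects are described by only a few pixels of shift, which is why long-range depth is harder to measure precisely.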

Imagine the OAK-D-Lite as the Leatherman multi-tool of the machine learning world. If you've ever needed a performance boost while running machine learnt AI systems like facial recognition on a Raspberry Pi single board computer, then the OAK-D-Lite is the solution for you. OAK modules can run multiple neural networks simultaneously for visual perception tasks like object detection, image classification, pose estimation, text recognition and a whole bunch more, all while performing accurate depth estimation in real time.

This guide will demonstrate exactly how this kind of AI processing can be harnessed by the everyday maker, while giving you a peek at the programming behind the scenes so you can see and understand exactly what levers there are to adjust.

On the table in front of me is everything you need to set up your Raspberry Pi to work with the OAK-D-Lite camera module. We are using a Raspberry Pi 4 Model B 2GB, but the OAK-D-Lite can run with earlier, lower-spec Pis like the Raspberry Pi 3. You can even use a Raspberry Pi Zero, but keep in mind it may take some tinkering. Check out the full write-up, linked down below, for more on this.

Naturally, you're going to need an OAK-D-Lite camera module. Very importantly, you're also going to need a USB 3.0 (or better) to USB-C cable, as this device does not come with one. Make sure not to use a USB 2.0 to USB-C cable, as it may not provide enough power or support the data transfer. We also want a tripod to mount the OAK-D-Lite onto. Finally, you will need everything required to run this Raspberry Pi as a desktop computer. To be clear, I'm using Raspberry Pi OS Bullseye (Debian version 11).

We will now build up the hardware. Firstly, here is what you're presented with when you have the OAK-D-Lite in hand. With it out of the box and the protective plastics removed, you can start connecting it to a tripod and the Raspberry Pi single board computer. Start by screwing the OAK-D-Lite onto the tripod like so. Then connect the OAK-D-Lite's USB-C port to the Raspberry Pi using your USB-C to USB 3.0 (or better) rated cable, plugging it into the bottom USB 3.0 port in the middle of the Raspberry Pi 4 Model B board. This cable provides power to the OAK-D-Lite and the circuitry inside it, as well as carrying data back and forth between the two systems.

The next step is to plug in all the connections to your Raspberry Pi 4 Model B, as you would when setting up a desktop computer. Check the description for details.

I plugged the keyboard and mouse into the USB 2.0 ports so they won't take any power from the USB 3.0 ports, which will be powering our hungry OAK-D-Lite. Then we finish off by providing power to the system through the USB-C power supply. With the hardware setup complete, it's time to turn our attention to the software.

Now, with your system rebooted and connected to the internet, it's going to look exactly like this. This is a machine learning guide, so we now need to install the packages that make this camera module work with the Raspberry Pi. Open a new terminal window and, one by one, copy and paste each terminal command from the full written article (check the links in the description). Allow each command to finish before moving on to the next. If you are ever prompted "Do you want to continue?", simply press Y and then Enter on your keyboard to continue the process. This procedure should take less than 25 minutes.

With that last command completed, we have fully set up our Raspberry Pi single board computer to work with the OAK-D-Lite camera module, and all the AI scripts I'm going to demonstrate today are now on our system. Luxonis has created a nifty graphical user interface for their OAK-D-Lite cameras and Python examples, which can now be opened up and used. To do so, open a new terminal window using the black button on the top left of the screen. From here, type and enter the following line to open a new Thonny IDE with full permissions:

`sudo thonny`

As soon as you press Enter, this line runs and opens a new Thonny IDE with sudo-level privileges. Running Thonny IDE this way prevents issues with Python scripts that are not on the default user path.

Using the top toolbar of Thonny IDE, we can open our newly downloaded GUI Python script by pressing the Load button. This opens a file browser; the file we're looking for is located in the directory /home/pi/depthai and is named depthai_demo.py. As soon as we press the green Run button, the GUI system will start opening up. It will automatically start piping the extra packages and machine learnt systems that the camera module needs across from the Raspberry Pi.

From here, we can jump between 14 different machine learnt systems while a preview window is constantly shown, and that preview can be switched between all three cameras. The GUI also adds heaps of camera tweaks that we can quickly adjust on the fly with toggles and sliders. When using applicable AI systems, we can also add depth AI layers.

It is worth noting that running the machine learnt systems this way is slower than running the machine learnt Python scripts directly. However, it is a great way to test and experiment to find the optimal values, which can then be hard-coded into the main Python scripts. For most of the systems, the FPS is displayed at the top left of the preview window.
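The FPS figure is simply the number of frames that arrived over a recent stretch of time. As a rough sketch of how such a counter can work (a generic approach, not code taken from the Luxonis demo), the hypothetical `FPSCounter` class below keeps a sliding window of frame timestamps and divides the number of frame intervals by the time they span. The window size of 30 frames is an arbitrary choice.

```python
import time
from collections import deque
from typing import Optional


class FPSCounter:
    """Hypothetical rolling frames-per-second counter over the last `window` frames."""

    def __init__(self, window: int = 30):
        self.stamps = deque(maxlen=window)

    def tick(self, now: Optional[float] = None) -> None:
        # Record the arrival time of a frame (defaults to a monotonic clock).
        self.stamps.append(time.monotonic() if now is None else now)

    def fps(self) -> float:
        # At least two timestamps are needed to measure an interval.
        if len(self.stamps) < 2:
            return 0.0
        span = self.stamps[-1] - self.stamps[0]
        return (len(self.stamps) - 1) / span if span > 0 else 0.0


# Simulated frames arriving every 1/16 of a second.
counter = FPSCounter(window=30)
for i in range(60):
    counter.tick(now=i / 16)
print(f"{counter.fps():.1f} FPS")
```

Averaging over a window rather than timing a single frame keeps the on-screen number from flickering when one frame happens to arrive late.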

To see other previews from the device, we can use the preview switcher visible at the top left section of the GUI. The model that comes up first by default is MobileNet V2 SSD. On the top right, there are four different tabs, starting with the AI tab. Here, we can see a toggle to turn the machine learning on or off. It's worth noting here that the OAK-D-Lite makes for a formidable 4K web camera.

The CNN Model option allows us to swap between AI models. Let's jump to face-detection-retail, then click Apply and Restart. CNN stands for Convolutional Neural Network.

The next item on the list is SHAVEs. SHAVEs are the vector processing cores on the OAK-D-Lite's Myriad X chip, so by increasing the number of SHAVEs, we unlock more computational power and let the OAK-D-Lite run neural network calculations faster. With the OAK-D-Lite, it allows me to go all the way up to 12.

Next on the list is Model Source, which determines which camera's data (left, right or middle) the neural network analysis is performed on. Full FOV, when ticked, scales down the whole camera feed; when unticked, it crops the image to match the neural network's aspect ratio. More advanced options are here too, which can be accessed by clicking this tick box.
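The Full FOV choice comes down to simple geometry. A neural network expects a fixed input size (MobileNet-SSD, for example, takes 300 × 300 pixels), so a wider camera frame must either be squeezed to fit, keeping everything in view but distorting proportions, or cropped to the network's aspect ratio, keeping proportions but losing the edges. The sketch below computes both options for an assumed 1920 × 1080 preview; the function names are hypothetical and this is not the DepthAI implementation.

```python
from typing import Tuple


def full_fov_scale(frame_w: int, frame_h: int, nn_w: int, nn_h: int) -> Tuple[float, float]:
    """Full FOV: resize the whole frame to the NN input; x and y scale independently."""
    return nn_w / frame_w, nn_h / frame_h


def center_crop(frame_w: int, frame_h: int, nn_w: int, nn_h: int) -> Tuple[int, int, int, int]:
    """Crop: largest centred region with the NN's aspect ratio, as (x, y, w, h)."""
    target = nn_w / nn_h
    if frame_w / frame_h > target:
        # Frame is too wide: keep the full height, trim the sides.
        w, h = round(frame_h * target), frame_h
    else:
        # Frame is too tall: keep the full width, trim top and bottom.
        w, h = frame_w, round(frame_w / target)
    return (frame_w - w) // 2, (frame_h - h) // 2, w, h


sx, sy = full_fov_scale(1920, 1080, 300, 300)
print(f"Full FOV: x scaled by {sx:.3f}, y by {sy:.3f} (proportions distorted)")
x, y, w, h = center_crop(1920, 1080, 300, 300)
print(f"Crop: keep {w}x{h} starting at ({x}, {y}); {1920 - w} columns discarded")
```

With a square network input, cropping throws away 840 of the 1920 columns, while Full FOV keeps them all but compresses the image horizontally more than vertically.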

Let's dive into the next tab, Depth. The first thing to look at is the toggle to turn depth on or off. This can run in conjunction with other AI scripts, as you can see happening right now: depth is turned on and the system has identified my face. It then tells me how far my face is from the camera. Currently, I'm around one metre away, and if I step further back, you'll see the distance increase. It's very clever what two stereo cameras can achieve.
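To report a distance for a detected face, the depth map and the detection have to be combined. One simple way to do that, sketched below as an assumption rather than as what the demo does internally, is to take the depth values that fall inside the face's bounding box, ignore invalid zeros, and use their median so that stray background pixels near the edges of the box don't skew the result. The depth frame here is a tiny hypothetical grid in millimetres.

```python
from statistics import median
from typing import List, Optional, Sequence, Tuple


def roi_distance_mm(depth: Sequence[Sequence[int]], box: Tuple[int, int, int, int]) -> Optional[float]:
    """Median of valid (non-zero) depth values inside box = (x0, y0, x1, y1), end-exclusive."""
    x0, y0, x1, y1 = box
    values: List[int] = [
        depth[y][x]
        for y in range(y0, y1)
        for x in range(x0, x1)
        if depth[y][x] > 0  # 0 marks pixels where no stereo match was found
    ]
    return median(values) if values else None


# Hypothetical 4x4 depth map: a face at ~1000 mm in front of a wall at ~3000 mm.
depth_map = [
    [3000, 3000, 3000, 3000],
    [3000, 1000, 1010, 3000],
    [3000, 990, 0, 3000],
    [3000, 3000, 3000, 3000],
]
print(roi_distance_mm(depth_map, (1, 1, 3, 3)))  # prints 1000; the 0 is skipped
```

The median is a deliberate choice over the mean: if the box spills onto the wall behind the face, a few 3000 mm pixels barely move the median, whereas they would drag an average well away from the true distance.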

Now, there are a lot of sliders and toggles and they might seem advanced, but the really useful ones to know are Disparity, Subpixel and Extended Disparity. Turn Disparity on if you want the OAK-D-Lite to perform the depth calculations instead of the Raspberry Pi. Let's do that right now. Before, we were sitting at around 15.3 FPS, and now we're at around 16, because we've offloaded that work from the Raspberry Pi to the camera module.

Next is Subpixel. Turn this on if you want to measure long-range distances, more than a couple of metres, with this camera module. Turn on Extended Disparity if you want to measure things that are really close, less than around 30 centimetres from the OAK-D-Lite, without it getting confused.
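Both settings can be reasoned about with the stereo relation Z = f × B / d. Extended disparity widens the range of disparities the matcher searches, so larger disparities (closer objects) can be measured; subpixel mode lets disparity take fractional values, so each disparity step corresponds to a smaller depth step at long range. The sketch assumes a default search range of 95 pixels, doubled to 190 with extended disparity, and 3 fractional subpixel bits (1/8 pixel steps), as described in the DepthAI documentation; treat these as assumptions and check the docs for your version. The 450 pixel focal length and 7.5 cm baseline are hypothetical.

```python
FOCAL_PX = 450.0        # hypothetical focal length, pixels
BASELINE_CM = 7.5       # assumed stereo baseline, cm
MAX_DISPARITY = 95      # assumed default disparity search range, pixels
EXTENDED_MAX = 190      # assumed search range with extended disparity enabled
SUBPIXEL_STEP = 1 / 8   # assumed disparity step with 3 fractional subpixel bits


def min_depth_cm(max_disparity: float) -> float:
    """Closest measurable depth, set by the largest disparity the matcher searches."""
    return FOCAL_PX * BASELINE_CM / max_disparity


def depth_step_cm(depth_cm: float, disparity_step: float) -> float:
    """Approximate depth change for one disparity step at a given depth.

    From Z = f*B/d, |dZ/dd| = Z**2 / (f*B), so dZ ~= Z**2 / (f*B) * step.
    """
    return depth_cm ** 2 / (FOCAL_PX * BASELINE_CM) * disparity_step


print(f"closest depth, standard: {min_depth_cm(MAX_DISPARITY):.1f} cm")
print(f"closest depth, extended: {min_depth_cm(EXTENDED_MAX):.1f} cm")
print(f"depth step at 3 m, whole pixels: {depth_step_cm(300, 1):.1f} cm")
print(f"depth step at 3 m, subpixel:     {depth_step_cm(300, SUBPIXEL_STEP):.1f} cm")
```

Under these assumptions, extended disparity roughly halves the closest usable distance, from about 36 cm to about 18 cm, which fits the advice about objects under 30 cm, while subpixel mode shrinks the depth step at 3 m from roughly 27 cm to roughly 3 cm.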

We can also set a confidence threshold here. The higher the confidence threshold, the more sure the machine learnt system has to be that what it sees is really there before it accepts it. For instance, you can see the confidence going up and down a little bit right here. If I crank the threshold all the way up, it would only accept my face if it were 100% sure.
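A confidence threshold is just a cut-off applied to each result the network produces. The sketch below filters a list of hypothetical face detections, each with a confidence score between 0 and 1, keeping only those at or above the threshold. Raising the threshold trades missed faces for fewer false positives. The `Detection` type and the scores are made up for illustration.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Detection:
    label: str
    confidence: float  # the network's score, 0.0 to 1.0


def filter_detections(dets: List[Detection], threshold: float) -> List[Detection]:
    """Keep only detections whose confidence meets the threshold."""
    return [d for d in dets if d.confidence >= threshold]


dets = [Detection("face", 0.97), Detection("face", 0.62), Detection("face", 0.31)]
for t in (0.3, 0.5, 0.95, 1.0):
    kept = filter_detections(dets, t)
    print(f"threshold {t:.2f}: {len(kept)} face(s) kept")
```

At a threshold of 1.0 even the 0.97 detection is dropped, which matches the behaviour described above when the slider is cranked all the way up.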

If we then jump into the Camera tab and open up the advanced options, you'll see all the normal sliders and toggles you'd expect. And finally, in Miscellaneous, you'll find video recording and ways to set up auto reporting from the OAK-D-Lite. There are some webcam settings in here too.

I'll jump through some machine learnt systems now to give you a highlight reel. Know that every piece of code it takes to run these is open source and runs directly on the physical system in front of me. You're just gonna have to see this to believe it. Check out the depthai-experiments directory in your home folder. Each folder in here holds a unique machine learnt system that we can run.

Having run through the demo GUI, let's now explore some of the experimental Luxonis code. All of this machine learnt content is open source, and honestly it's pretty staggering. The folder titles give a great indication of each machine learnt system, so take some time to look them over.

We're going to monitor our heartbeat remotely using only a camera. You can find this Python script in the directory /home/pi/heart_rate_estimation-main/heart_rate_estimation-main, and it's called main.py. We will need to alter two lines in the setup. First, comment out this line down here. It locks the camera at a very close focal point, and we don't really want that; we want the camera to be able to focus wherever the humans are.

The next thing to keep note of is probably the most powerful lever or control you have over this, which is the pixel threshold number. By default, this sits at around 1.5 to 2. If you're in a particularly bright environment, crank this number up, and if you're in a particularly dark environment, crank it down. This helps the system accommodate environments with different lighting and get more accurate results. With those two things done, just press the big green Run button as normal. A video preview window will open up just like before, and it will start tracking human faces. With that preview window selected, press S on your keyboard and the heart rate estimation will start. It will take a little bit of time to figure out the beats per minute.

The way this system works is as follows. It starts by focusing on and locking onto a human face, then hyper-focuses on the forehead of that face. It then uses edge detection image manipulation to hyper-exaggerate color and edge changes, which means it can pick up on the ultra-subtle pulsing of the veins in the forehead. Finally, a machine learnt system identifies these changes, which occur at the same rate as your heartbeat, and that is how it determines your beats per minute.
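The final step, turning a rhythmic change in the forehead into beats per minute, is a frequency-estimation problem. The sketch below swaps in a simpler, classic technique rather than the machine learnt system the script uses: it takes a per-frame brightness signal from the forehead region, removes its average, and scans candidate heart rates from 40 to 180 BPM to find the one whose sinusoid best matches the signal (effectively evaluating one DFT bin per candidate). The function name, the 30 FPS frame rate and the synthetic signal are assumptions for illustration.

```python
import math
from typing import Sequence


def estimate_bpm(signal: Sequence[float], fps: float, lo_bpm: int = 40, hi_bpm: int = 180) -> int:
    """Return the candidate BPM whose frequency carries the most power in the signal."""
    mean = sum(signal) / len(signal)
    centred = [s - mean for s in signal]  # drop the constant brightness level
    best_bpm, best_power = lo_bpm, -1.0
    for bpm in range(lo_bpm, hi_bpm + 1):
        freq_hz = bpm / 60.0
        # Correlate with a cosine and a sine at this frequency (a single DFT bin).
        re = sum(x * math.cos(2 * math.pi * freq_hz * n / fps) for n, x in enumerate(centred))
        im = sum(x * math.sin(2 * math.pi * freq_hz * n / fps) for n, x in enumerate(centred))
        power = re * re + im * im
        if power > best_power:
            best_bpm, best_power = bpm, power
    return best_bpm


# Synthetic forehead brightness: a steady level plus a tiny 72 BPM (1.2 Hz) pulse.
FPS = 30.0
samples = [120.0 + 0.5 * math.sin(2 * math.pi * 1.2 * n / FPS) for n in range(int(10 * FPS))]
print(estimate_bpm(samples, FPS))
```

A window of T seconds can only separate frequencies roughly 1/T hertz apart, so 10 seconds of video distinguishes heart rates only about 6 BPM apart (0.1 Hz × 60), and real forehead signals are far noisier than this synthetic one. That is one reason the estimate takes a little time to settle.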

Modern AI systems have a bad rap for destroying privacy, but what if we turned that whole concept on its head and instead made AI systems that encourage privacy? So let's use face detection to perform automatic AI face blurring. You can find the main.py Python script in the directory /home/pi/depthAI/gen2-blur-faces. We can open and run it using the same sudo Thonny IDE instance.
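Under the hood, auto face blurring is two steps: a face detector returns a bounding box, and only the pixels inside that box are blurred. The sketch below assumes the detector reports the box in normalised 0 to 1 coordinates and applies a simple box blur, averaging each pixel with its neighbours inside the face region, to a tiny greyscale image stored as nested lists. It is illustrative only; the helper names are hypothetical, and a real script would work on full colour frames with an image library.

```python
from typing import List, Tuple

Image = List[List[float]]


def to_pixel_box(norm_box: Tuple[float, float, float, float], width: int, height: int) -> Tuple[int, int, int, int]:
    """Convert a normalised (xmin, ymin, xmax, ymax) box to clamped pixel coordinates."""
    xmin, ymin, xmax, ymax = norm_box

    def clamp(v: float, limit: int) -> int:
        # Scale to pixels and keep the result inside [0, limit].
        return max(0, min(limit, round(v * limit)))

    return clamp(xmin, width), clamp(ymin, height), clamp(xmax, width), clamp(ymax, height)


def blur_region(img: Image, box: Tuple[int, int, int, int], radius: int = 1) -> Image:
    """Box-blur the pixels inside box = (x0, y0, x1, y1); pixels outside are untouched."""
    x0, y0, x1, y1 = box
    out = [row[:] for row in img]  # copy, so every average reads the original pixels
    for y in range(y0, y1):
        for x in range(x0, x1):
            # Average the neighbourhood, clipped to the face box so background doesn't bleed in.
            ys = range(max(y0, y - radius), min(y1, y + radius + 1))
            xs = range(max(x0, x - radius), min(x1, x + radius + 1))
            vals = [img[yy][xx] for yy in ys for xx in xs]
            out[y][x] = sum(vals) / len(vals)
    return out


# Hypothetical 10x10 greyscale frame with one bright "feature" at (5, 5).
frame = [[0.0] * 10 for _ in range(10)]
frame[5][5] = 90.0
box = to_pixel_box((0.2, 0.2, 0.8, 0.8), 10, 10)  # pretend a face was detected here
blurred = blur_region(frame, box, radius=1)
print(box, blurred[5][5], blurred[5][4], blurred[0][0])
```

The bright pixel is smeared across its neighbours inside the box, while everything outside the detected face stays sharp; a larger `radius` gives a stronger blur.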

As soon as you run the script, a live camera preview window pops up, and as you can see, as soon as it identifies my face, it blurs it, which is pretty nice. This system works with multiple faces too. This camera module crushes it, which is why so many organizations and open source developers are jumping on the bandwagon. One of those intrepid organizations is Coretech Technologies, based in Canada. They created a machine learning system that takes gesture controls and lets you manipulate STL CAD files that are projected into artificial reality in front of you.

You can find it in the directory /home/pi/vision_ui, named main.py. Open this script in an IDE and press Run in the same way as before. As soon as you run it, you're going to see a preview window with an object in front of you, represented in VR. The object's size is controlled by the distance between my index finger and thumb. Finally, you can rotate the object by showing both your hands to the camera and then rotating them. It's pretty neat. Just imagine whipping that out during your next Zoom meeting when you're evaluating a project design. They'll be talking about it for days!
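The two gestures boil down to small bits of geometry on hand landmark positions. In the sketch below, the object's scale is the thumb-to-index-finger distance divided by a reference distance (clamped so the model never vanishes or explodes), and the rotation is the angle of the line joining the two hands. The landmark coordinates, reference distance and clamp limits are hypothetical; this is not the vision_ui project's code.

```python
import math
from typing import Tuple

Point = Tuple[float, float]  # (x, y) in image pixels, y pointing down


def pinch_scale(thumb: Point, index: Point, ref_dist: float = 50.0,
                lo: float = 0.2, hi: float = 3.0) -> float:
    """Scale factor from the thumb-index distance, clamped to [lo, hi]."""
    dist = math.dist(thumb, index)
    return max(lo, min(hi, dist / ref_dist))


def two_hand_angle(left: Point, right: Point) -> float:
    """Angle in degrees of the line from the left hand to the right hand.

    Image y grows downwards, so a positive angle means the right hand is lower.
    """
    return math.degrees(math.atan2(right[1] - left[1], right[0] - left[0]))


print(pinch_scale((100, 100), (130, 140)))  # 50 px apart -> scale 1.0
print(pinch_scale((100, 100), (400, 500)))  # very wide pinch -> clamped to 3.0
print(two_hand_angle((0, 0), (10, 10)))     # right hand lower -> about 45 degrees
```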

The OAK-D-Lite is a remarkable tool, and there is so much more worth exploring. Road masking for self-driving cars, or safety AI systems that watch for human proximity to dangerous moving equipment, are just some of the machine learning systems that can run on the edge. Build a complete project with these technologies and you would end up with a formidable supervisor that never sleeps or blinks.
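A human-proximity safety check like the one mentioned above can be built on the spatial coordinates a stereo camera provides. As a minimal sketch, assume each detected person comes with an (x, y, z) position in millimetres relative to the camera; the code flags anyone closer than a safety radius to a hazard point. The hazard position, radius and person coordinates are all hypothetical.

```python
import math
from typing import Dict, List, Tuple

Vec3 = Tuple[float, float, float]  # (x, y, z) in millimetres, camera coordinates


def people_too_close(people: Dict[str, Vec3], hazard: Vec3, radius_mm: float) -> List[str]:
    """Return the IDs of people within radius_mm of the hazard point, sorted."""
    return sorted(pid for pid, pos in people.items() if math.dist(pos, hazard) < radius_mm)


HAZARD = (0.0, 0.0, 2000.0)  # hypothetical machine 2 m in front of the camera
people = {
    "person-1": (300.0, 0.0, 1800.0),    # about 0.36 m from the machine
    "person-2": (-1500.0, 0.0, 3000.0),  # about 1.8 m from the machine
}
for pid in people_too_close(people, HAZARD, radius_mm=1000.0):
    print(f"WARNING: {pid} is inside the safety zone")
```

Measuring the distance in 3D, rather than in the 2D image, matters here: someone standing well behind the machine can overlap it in the picture while being safely out of reach.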

And that's really it for today. You now have all the knowledge to tackle the current world of applied edge AI machine learnt systems head on. The OAK-D-Lite is an extraordinary product, particularly in combination with the best single board computer in existence, the Raspberry Pi, a full-time maker's dream.

If you have any questions, write me a message down below. We're always happy to help. As soon as I'm done here, I'm going to plug that OAK-D-Lite back in and get it to keep tabs on me as I carry on doing my thing. So, until next time, stay cozy!
